
Understanding shelf companies and shell companies
In a business climate where revenues are flat or trending down and business loans are tougher than ever to get, “shelf” and “shell” companies continue to be an easy option for those seeking business opportunities. Shelf companies are defined as corporations formed in a low-tax, low-regulation state in order to be sold off for their excellent credit rating. Search the internet and you will find a plethora of vendors selling companies as turnkey business packages. Historically, off-the-shelf structures were used to streamline a start-up: an entrepreneur instantly owns a company that has been in business for several years without debt or liability. However, selling them as a way to get around credit guidelines is a new practice, and one that is unethical and possibly illegal. Creating companies that impersonate stable, well-established businesses in order to deceive creditors or suppliers is another way criminals are putting shelf companies to fraudulent use. Shell companies are characterized as fictitious entities created for the sole purpose of committing fraud. They often provide a convenient method for money laundering because they are easy and inexpensive to form and operate. These companies typically do not have a physical presence, although some may set up a storefront. According to the U.S. Department of the Treasury’s Financial Crimes Enforcement Network, shell companies may even purchase corporate office “service packages” or “executive meeting suites” in order to appear to have a more significant local presence. These packages often include a state business license, a local street address, an office that is staffed during business hours, a conference room for initial meetings, a local telephone listing with a receptionist, and 24-hour personalized voice mail.
In one recent bust-out fraud scenario, a shell company operated out of an office building and signed up for service with a voice over Internet protocol (VoIP) provider. While the VoIP provider typically conducts on-site visits to all new accounts, this step was skipped because the account was acquired through a channel partner. During months one and two, the account maintained normal usage patterns and invoices were paid promptly. In month three, the account’s international toll activity spiked, prompting the provider to question the unusual activity. The customer responded with a seemingly legitimate business explanation and offered additional documentation. However, the following month the account contact and the business disappeared, leaving the VoIP provider with a substantial five-figure loss. A follow-up visit to the business found a vacant office suite. While it’s unrealistic to think all shelf and shell companies can be identified, there are tools that can help you verify businesses, identify repeat offenders, and minimize fraud losses. In the example mentioned above, a post-loss account review through Experian’s BizID identified an obvious address discrepancy: 12 businesses all listed at the same address, suggesting that the perpetrator had set up numerous businesses and victimized multiple organizations. You can avoid being the next victim by revisiting and refining your fraud best practices today. Learn more about Experian BizID and how to protect your business.
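The kind of address check that surfaced the 12 businesses can be illustrated with a simple grouping of business records by normalized address. This is a minimal, hypothetical sketch, not how BizID works: the record format, the crude normalization rule and the `min_count` threshold are all assumptions made for illustration.

```python
import re
from collections import defaultdict


def normalize_address(address: str) -> str:
    """Crude, hypothetical normalization: lowercase, turn punctuation into
    spaces, and collapse runs of whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", address.lower())
    return " ".join(cleaned.split())


def shared_address_clusters(businesses, min_count=3):
    """Group (business_name, address) pairs by normalized address and return
    only the addresses shared by at least `min_count` distinct businesses."""
    by_address = defaultdict(set)
    for name, address in businesses:
        by_address[normalize_address(address)].add(name)
    return {
        addr: sorted(names)
        for addr, names in by_address.items()
        if len(names) >= min_count
    }


if __name__ == "__main__":
    # Illustrative records only; the names and addresses are made up.
    sample = [
        ("Acme Telecom LLC", "100 Main St., Suite 200"),
        ("Blue Sky Imports", "100 main st suite 200"),
        ("Cedar Consulting", "100 Main St  Suite 200"),
        ("Delta Foods", "55 Oak Ave"),
    ]
    print(shared_address_clusters(sample))
    # {'100 main st suite 200': ['Acme Telecom LLC', 'Blue Sky Imports', 'Cedar Consulting']}
```

A shared address is a signal for review, not proof of fraud: executive suites and virtual offices legitimately host many tenants, which is exactly why fraudsters find them useful.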

According to the latest Experian-Oliver Wyman Market Intelligence Report, mortgage originations increased 25% year over year in Q1 2015 to $316 billion.

Soaring in the solar energy utility market
By: Mike Horrocks and Rod Everson
The summer solstice is a great time of the year: it kicks off summer and the time to enjoy the sunshine and explore! For me, it is also a reminder that the days are now only getting shorter, which makes me think about my goals for the year and whether I am going to hit them. In the spirit of kicking off summer, I thought I would talk about three opportunities that the utility vertical could and should take advantage of.
1. The future of Solar Photovoltaics (PV) is just getting brighter
A recent study called out an expected 25 percent jump in Solar PV installs over the previous year. This jump is just another in a long line of solar install records. While the overall cost of these installs has dropped, one must ask whether solar is accessible to everyone. The answer is: not yet. A potential opportunity may come in the form of community solar as an alternative to rooftop solar. In this scenario, a utility installs an array of PV cells and then carves out a specific cell to lease to an individual residential customer, crediting his or her bill at a percentage of the cost.
2. Generations are bringing change
Just as spring gives way to summer, summer will give way to fall. The same is true in the utility markets on many fronts. At a larger infrastructure scale, utilities have to think about the kinds of plants and capital investments they want to make. Another report indicated that 60,000 megawatts of coal-fired capacity is going to be retired over the next four years. This will obviously change capital decision-making in the industry. At a more personal level, however, consumers and their behaviors are changing as well. Are those changes being accounted for in your organization? Is the next generation of consumers, and the products and services it will demand, being factored into your strategy? How will you identify those consumers and secure them as customers?
For example, while electrical energy consumption has been decreasing, what would be the impact of a revolution in battery technology? What if charging an electric car’s battery became as fast as filling a tank of gas? What if the battery gave you the same range as a tank of gas, at a lower cost per mile? Would electricity usage spike?
3. Blackouts happen; be prepared
The best-laid plans sometimes still cannot account for the acts of God that disrupt the grid. Blackouts happen, and if you don’t have flashlights with fresh batteries, you will be left in the dark. The same uncertainty is inevitable in the utility vertical. In the 2015 PwC Power and Utility Survey, 3 percent of respondents said business models would see only minor disruption, while the rest expected the impact to range from moderately to very disruptive. In fact, more than 47 percent of respondents said the changes would be very disruptive. What kind of flashlight-and-fresh-batteries strategy will you employ when the lights go out? Are your decision strategies and risk-management practices based on outdated solutions or approaches? Consider whether your business can take advantage of these situations. If you’re not sure, let’s set aside some time to discuss it, and I can share how Experian has helped others. There are still many sunny days ahead, but act now, before the seasons change and you and your strategies are left out in the cold.

Some of my fondest memories of childhood road trips are the games we played. I’m sure many kids played “I Spy” and had sing-alongs, but my go-to game was “Slug Bug” (a game where you get points for spotting a Volkswagen Beetle). While it’s been quite some time since I’ve played, I still find myself very aware of the different types of vehicles around me. As a matter of fact, if I were to play the game today, I’d probably rack up points for the number of crossover utility vehicles (CUVs) I’ve seen on the road lately. There are quite a few. After reviewing Experian Automotive’s most recent Market Trends and Registration analysis, it all made sense. During the first quarter of 2015, the entry-level CUV was the top new registered vehicle segment, up 6.3 percent from a year ago. It also marked the fifth consecutive quarter that the entry-level CUV was the top-selling new vehicle segment. It was followed by the small economy car and full-sized pickup truck segments. The analysis also found that the CUV wasn’t the only segment to see an uptick in new registrations. In fact, seven of the top 10 new registered vehicle segments saw increases in sales from a year ago, and 16.6 million new vehicles overall found their way onto U.S. roads in the first quarter of 2015. The spike in new registrations, combined with fewer vehicles going out of operation, drove the number of vehicles on the road to nearly 253 million, its highest level since the second quarter of 2008. With CUVs on top of the mountain of new vehicle sales, and small economy cars sprinting past the full-sized pickup truck, you might think similar patterns have emerged in the overall number of vehicles on the road. But that’s not necessarily the case. Despite falling to the third most purchased new vehicle segment, full-sized pickup trucks remain the most popular vehicle on the road, making up roughly 15 percent of the market.
That said, entry-level CUVs have seen the most dramatic increase, rising 12.2 percent from a year ago. Trends in the automotive market can sometimes appear cyclical, which is why it’s important for the industry to pay close attention to the data sets available to it. By leveraging the data, dealers, retailers and manufacturers can draw insights that lead to better business decisions, whether it’s relocating inventory or adapting to consumer demand. Similarly, identifying what vehicles consumers are driving can do more than help you win at “Slug Bug”; it can help you win in the market.

Nowadays, whenever you hear news about the automotive industry, a negative tone tends to pop up. Whether it’s the increase in lending to subprime consumers or the lengthening of loan terms, the stories lead one to believe that the industry is headed toward another “bubble.” However, that’s not necessarily the case. When we look at the data, the automotive finance market actually points to a strong industry as a whole. Yes, it’s true that subprime lending is up. But lending has increased across all risk tiers. In fact, loans to super prime consumers have seen the largest increase in volume compared to last year, approximately 8.5 percent. To take it a step further, the market share of loans to subprime consumers has decreased from a year ago. Stripped to its bare bones, this means that consumers are not only purchasing cars, but are taking out loans to do so. Furthermore, given the percentage of loans extended to each risk tier, we see that lenders have not opened up their portfolios to increased risk. Both are positive indications of a strong market. We’ve also seen a steady increase in the length of loan terms. However, before anyone jumps to rash conclusions, this is not as ominous a sign as it may seem. As cars and trucks have become more expensive to purchase, the easiest way for consumers to keep their monthly payments affordable has been to extend the life of their loans. That said, it’s critical for consumers to understand that by taking out a longer loan, they may need to hold onto the vehicle longer to avoid facing negative equity should they trade it in after a few years. An alternate route many consumers have taken to keep their monthly payments affordable has been leasing. In the first quarter of 2015, leasing accounted for 30.2 percent of all financed new vehicles, its highest level on record. At the end of the day, consumers are continuing to purchase vehicles, and that’s a positive sign for the industry.
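To see why a longer term lowers the monthly payment, and why it raises the risk of negative equity, the standard fixed-rate amortization formula M = P·r / (1 − (1 + r)^−n) can be applied, where P is the amount financed, r the monthly interest rate and n the number of monthly payments. The sketch below compares a 60-month and a 72-month term; the $30,000 amount and 4% APR are hypothetical figures chosen for illustration, not Experian data.

```python
def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Fixed-rate amortizing payment: M = P*r / (1 - (1 + r)**-n), r = monthly rate."""
    r = annual_rate / 12
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)


def remaining_balance(principal: float, annual_rate: float, months: int,
                      payments_made: int) -> float:
    """Balance still owed after `payments_made` scheduled payments."""
    r = annual_rate / 12
    m = monthly_payment(principal, annual_rate, months)
    if r == 0:
        return principal - m * payments_made
    growth = (1 + r) ** payments_made
    # Principal grown at the loan rate, minus the future value of payments made.
    return principal * growth - m * (growth - 1) / r


if __name__ == "__main__":
    # Hypothetical loan: $30,000 at 4% APR (illustrative figures only).
    for term in (60, 72):
        pay = monthly_payment(30_000, 0.04, term)
        interest = pay * term - 30_000
        owed = remaining_balance(30_000, 0.04, term, 36)
        print(f"{term} months: payment ${pay:,.2f}, "
              f"total interest ${interest:,.2f}, owed after 3 years ${owed:,.2f}")
```

The longer term lowers the payment but pays the principal down more slowly, so after three years more is still owed on the 72-month loan; if the vehicle’s value has dropped below that balance, trading it in means carrying negative equity.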
By gaining a deeper understanding of current automotive financing trends, lenders will be able to use the data and insights to their benefit by better meeting the needs of the marketplace and mitigating the risk of their portfolios. And if they do that, the good times can continue to roll for the industry.

When it addressed payments onstage at its Worldwide Developers Conference (WWDC), Apple eschewed banks in favor of a retailer focus. I sense this is an intentional shift: now that it has support stateside from all four networks and all the major issuers, Apple understands that it needs to shift its focus to signing up more merchants, and everything we heard drove that point home. That includes Square’s support for NFC, as well as the announcements around Kohl’s, JCPenney and BJ’s. MasterCard’s Digital Enablement Service (MDES), the counterpart to Visa’s Token Service, is the tokenization service that has enabled these partnerships, specifically through MasterCard partners such as Synchrony (formerly GE Capital), which brought on JCPenney; Alliance Data, which brought on BJ’s; and Capital One, which enabled Kohl’s. Within payments, common-sense questions such as “Why isn’t NFC just another radio that transmits payment info?” or “Why aren’t retailer-friendly payment choices using NFC?” have been met with contemptuous stares. As I have written umpteen times, payments has been a source of misalignment between merchants and banks. Thus, conversations that hinged on NFC have been a non-starter for merchants who view it as more than a radio: as a Trojan horse for Visa/MA, bearing higher costs. When Android opened up access to NFC through Host Card Emulation (HCE) and the networks supported it through tokenization, merchants had a legitimate pathway to getting private label cards on NFC. So far, very few have actually done that (Tim Hortons is the best example). But with the top two department store chains (Macy’s and Kohl’s), we are seeing a thaw in that position and the beginnings of a willingness to view these technologies pragmatically and without morbid fear. Google is clearly chasing Apple on the retailer front, and Apple is doing all it can to dig a wider moat by emphasizing privacy and transparency.
The strategy is proving quite effective, and Google will have to “apologize beforehand” ahead of any merchant agreement, especially now that retailers have control over which wallets they work with, and how. This control derives from the structures set up alongside the Visa and MasterCard tokenization agreements, and retailers with co-brand or private label cards can exercise it through their bank partners. Google therefore has to work on two fronts: incentivizing merchants to bring their cards to Android Pay, while navigating the turbulence Apple has left in its wake and untangling the “customer privacy” knot. For merchants, at the end of the day, the questions that remain are about operating costs and control. First, does participation in the MDES and VEDP tokenization services through bank partners imply a higher cost to play for private label cards? I doubt Apple’s 15 bps “skim off the top” revenue play translates to private label, especially since Apple’s fee is tied to “fraud protection” and fraud in private label is non-existent due to its closed-loop nature. Still, there could be an acquisition cost, or Apple may be playing a long game. Further, token issuance and lifecycle management costs aren’t trivial given the size of some of these merchants’ portfolios. That said, Kohl’s participation affords everyone some clarity. Second, merchants want to bring payments inside their own apps, just as they already can through in-app payments on mobile or online. Forcing consumers through a Wallet app runs counter to that intent and is undesirable in the long run. Loyalty as a construct is tangled up in payments today, and the merchants who have achieved a clean separation (very few) or can afford to avoid it (those with large private label portfolios that are really ‘loyalty programs with payments tacked on’) benefit for now.
But soon, either they will need to fold the payment interaction into their apps, or Apple must streamline the clunky swap. The auto-prompt of rewards cards in Wallet is a good step, but it feels more like jerry-rigging than the correct approach. From a merchant integration point of view, Wallet still feels very much like v1.5.
Wallet, not Passbook
Finally, Apple’s rebranding of Passbook as Wallet is a subtle yet important step. A “bank wallet” or a “credit union wallet” is a misnomer. No one bank can hope to build a wallet, because my payment choices aren’t confined to a single bank. And even where banks have promoted “open wallets” and incentivized peers to participate, the response has been crickets at best. On the flip side, an ecosystem player that touches more than a device (a handful of experiential services in entertainment and commerce, a million and a half apps, all underpinned by identity) can call itself a true wallet, because it is solving for the complete definition of that term rather than pieces of it. Hence Google and Apple. So the rebranding, while inevitable, finds a firm footing in payments and looks toward loyalty and what lies beyond. Solving for those challenges has less to do with getting there first than with putting the right pieces in play. And Apple’s emphasis on privacy (or posturing, depending on whom you listen to) has its roots in what Apple wants to become, and in what it wants to access and store on our behalf. Being the custodian of a bank-issued identity is one thing. Being a responsible custodian of the consumer’s digital health, behavior and identity trifecta has never been fully attempted. It requires pushing on all fronts, and any careful articulation of Apple’s purpose to the public must be preceded by the conviction behind such emphasis (or posturing). Make sure to read our perspective paper to see why emerging channels call for advanced fraud identification techniques.

Every time I turn on my television, look out my window or drive into the office, I see hybrid or electric vehicles on the road. These days it seems like almost everyone is going green. With all the alternative-powered vehicles out there, you’d think the market is simply booming, right? Would you believe me if I told you that the percentage of newly registered alternative-powered vehicles in 2014 actually declined from the previous year? It’s true. With this revelation, we took a deeper look into the alternative-powered vehicle market to see what else we could discover. Here’s what we found: Did you know that consumers who buy “Green” vehicles pay cash for them at a higher rate than those who buy more traditional models? Again, it’s a fact. The point is, there are many stereotypes and misconceptions about alternative-powered vehicles, as well as the consumers who purchase them. But just as there are hundreds of stereotypes, there is also an abundance of data to help confirm or reject them. At Experian, we’re committed to using our data for good by providing information to the market that helps dealers, manufacturers and consumers better understand the environment we live in, whether we are talking broadly about what metal is moving or, more specifically, providing actionable insights into who is “going green.” For instance, consumers purchasing an alternative-powered vehicle tend to be a lower credit risk than those purchasing a traditional model. Nearly 83 percent of consumers who purchased a “Green” vehicle fell within the prime credit category, compared with only 71.5 percent of consumers who purchased gas-powered models. Additionally, of the top five alternative-powered vehicle models in 2014, three came from the Toyota family. The Toyota Prius and Prius C held the top two spots, while the Camry was in the number four position. The Ford Fusion and Nissan Leaf made up the third and fifth spots, respectively.
It’s these insights that enable the automotive industry and its consumers to take the appropriate action and make the best decisions for them. For consumers, insight into the market paints a clearer picture of which options are most popular and available. Dealers and manufacturers gain a better understanding of consumer demand and can provide inventory that meets the needs of the market. The fact of the matter is, opportunity exists everywhere you look; you just have to know what you’re looking for. You can’t let preconceived notions or ideas dictate future decisions. By leveraging data and insight, the automotive industry is able to uncover the unknowns and put itself in a good position to succeed, while helping consumers purchase vehicles that fit their specific lifestyles.

By: Mike Horrocks
The other day, American Banker ran an article titled “Is Loan Growth a Bad Idea Right Now?”, which raises some great questions about how banks should be looking at their C&I portfolios (or frankly any segment of the overall portfolio). I have to admit I was a little down on the industry for thinking that the only way we can grow is by cutting rates or making bad loans. This downer moment required that I hit shuffle on my playlist, and like an oracle from the past, The Clash and their hit song “Should I Stay or Should I Go” gave me sage-like insights that need to be shared. First, who are you listening to for advice? While I would not recommend having all the members of The Clash on your board of directors, could you have maybe one? Ask yourself: are your boards, executive management teams, loan committees, etc., all composed of the same kind of people, with maybe the only difference being iPhone versus Android? Get some alternative thinking in the mix. There is tons of research showing this works. Second, set your standards and stick to them. There is a part in the song that goes like this: “This indecision's buggin' me, If you don't want me, set me free. Exactly whom I'm supposed to be, Don't you know which clothes even fit me?” Set your standards and go after them. There should be no doubt about whether you are going to make a certain kind of loan based on its pricing. Know your pricing, know your limits, and dominate that market. Lastly, remember business cycles. I am hopeful and optimistic that we will have some good growth for a while, but there is always a downturn… always. Again from the lyrics: “If I go there will be trouble, An' if I stay it will be double.” In the American Banker article, M&T Bank CFO Rene Jones noted that an unnamed competitor made a 10-year, fixed-rate $30 million loan at a rate that M&T just could not match.
So congrats to M&T for recognizing its pricing limits, and maybe congrats to the unnamed bank for having some competitive advantage that allowed it to make the loan. However, if nothing like that is supporting the other bank, the short-term pain of explaining slower growth today may seem like nothing compared to the questioning it will get if that portfolio goes south. So in the end, I say grow, but grow soundly. Shake things up so you open new markets or create advantages in your current market, and rock the Casbah!

A behind-the-wheel look at alternative-power vehicles:

Tax return fraud: Using third-party data and analytics to stay one step ahead of fraudsters
By Neli Coleman
According to a May 2014 Governing Institute research study of 129 state and local government officials, 43 percent of respondents cited identity theft as the biggest challenge their agency faces regarding tax return fraud. Stealing identities and filing for tax refunds has become one of the fastest-growing nonviolent criminal activities in the country. These activities not only burden government agencies, but also rob taxpayers by preventing refunds from reaching the right people. Anyone who has access to a computer can fill out an income-tax form online and hit submit. Most tax returns are processed and refunds released within a few days or weeks. This quick turnaround doesn’t give the government time to fully authenticate all the elements submitted on returns, and fraudsters know how to exploit this vulnerability. Once released, these monies are virtually untraceable. Unfortunately, simply relying on business rules based on past behaviors and conducting internal database checks is no longer sufficient to stem the tide of increasing tax fraud. A risk-based identity-authentication process coupled with business-rules-based analysis and knowledge-based authentication tools is critical to identifying fraudulent tax returns. The ability to perform non-traditional checks that go beyond authenticating the individual, to consider the methods and devices used to perpetrate tax-refund fraud, further strengthens the fraud-detection process. Including multiple non-traditional checks within a risk-based authentication process closes additional loopholes exploited by tax fraudsters, while simultaneously decreasing the number of false positives.
Experian’s Tax Return Analysis Platform℠ provides both identity verification and risk-based authentication analytics that score the potential fraud risk of a tax return, along with specific flags that identify a return as fraudulent. Our data and analytics are the product of years of expertise in consumer behavior and fraud detection, along with unique services that detect fraud in the devices being used to submit returns and detect identity credentials that have been used to perpetrate fraud in financial transactions outside of tax. Together, rules-based and risk-based income-tax-refund fraud-detection protocols can curb one of the fastest-growing nonviolent criminal activities in the country. With identity theft reaching unprecedented levels, government agencies need new technologies and processes in place to stay one step ahead of fraudsters. In a world where most transactions are conducted in virtual anonymity, it is difficult, but not impossible, to keep pace with technological advances and their accompanying pitfalls. Combining existing authentication-based business rules with risk-based authentication techniques provided through third-party data and analytics services creates a multifaceted approach to income-tax-refund fraud detection, enabling revenue agencies to increase the number of fraudulent returns they detect. Every fraudulent return that is identified and not paid out improves the government’s ability to meet its constituents’ demand for services, while strengthening the public’s trust in the tax system.
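To make the idea of layering business rules with a risk score more concrete, here is a minimal sketch of how a revenue agency might combine the two into a release/review/hold decision. Everything in it is hypothetical: the `TaxReturn` fields, the three rules, the assumed 0 to 1000 score scale and the thresholds are illustrative assumptions, not the Tax Return Analysis Platform’s logic or interface.

```python
from dataclasses import dataclass


@dataclass
class TaxReturn:
    # Hypothetical per-return signals; real systems use many more.
    identity_verified: bool           # did the filer's identity data check out?
    device_seen_in_prior_fraud: bool  # device-level check on the submitting device
    returns_to_same_account: int      # returns routing refunds to this bank account
    risk_score: int                   # assumed scale: 0 (low risk) to 1000 (high risk)


def rule_flags(ret: TaxReturn) -> list[str]:
    """Traditional business rules: each rule that fires adds a named flag."""
    flags = []
    if not ret.identity_verified:
        flags.append("identity_not_verified")
    if ret.device_seen_in_prior_fraud:
        flags.append("known_fraud_device")
    if ret.returns_to_same_account > 3:
        flags.append("shared_refund_account")
    return flags


def decide(ret: TaxReturn, review_at: int = 600, hold_at: int = 850) -> tuple[str, list[str]]:
    """Combine rule flags with the risk score.

    - "hold": very high score or two or more rules fired; stop the refund.
    - "review": elevated score or one rule fired; e.g. route the filer to
      knowledge-based authentication before releasing the refund.
    - "release": nothing suspicious.
    """
    flags = rule_flags(ret)
    if ret.risk_score >= hold_at or len(flags) >= 2:
        return "hold", flags
    if ret.risk_score >= review_at or flags:
        return "review", flags
    return "release", flags
```

The two layers cover for each other: rules catch known patterns even when the score is low, while the score catches suspicious returns that no rule anticipated. Routing borderline cases to review rather than holding them outright is one way to keep false positives from delaying legitimate refunds.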

Source: IntelliView℠, powered by Experian
Sales of existing homes dropped 50% from the peak in August 2005 to the low point in July 2010. The spike in home sales in late 2009 and early 2010 was due to the large number of foreclosure sales as well as very low prices. Since 2010, sales have increased almost to the level they were at in 2000, before the financial crisis. However, the homeownership rate has steadily declined. How could sales pick up while the homeownership rate declined? Investors have entered the market, snapping up single-family homes and renting them out. Therefore, the recent good news in the existing home market has been driven by investors, not homeowners. But, as I point out below, this is changing. Looking at the homeownership rate by age, shown in the table below, it is clear that since the crisis the rate has declined most for people under 45. The marketing potential is greatest in this cohort, as the numbers indicate likely demand for housing.
Homeownership Rate by Age (Source: U.S. Census Bureau and Haver Analytics, as reported in the Federal Reserve Bank of St. Louis FRED database)
The factors that have impeded growth, described above, are beginning to reverse, which, along with pent-up demand, will present an opportunity for mortgage originators in 2015. Home prices have risen in 246 of the 277 cities tracked by Clear Capital. With prices going up, investors have begun to back away from the market, so prices are increasing at a slower rate in some cities, but they are still increasing. Therefore, the perception that homeownership is risky will likely change. In fact, in some areas, such as California’s coastal cities, sales are strong and prices are rising rapidly.
Lenders and regulators are recognizing that the stringent guidelines put in place in reaction to the crisis have overly constrained the market. Fannie Mae and Freddie Mac are reducing down payment requirements to as low as 3%. FHA is lowering its guarantee fee, reducing the amount of cash buyers need to close transactions. Private securitizations, which dried up completely, are beginning to reappear, especially in the jumbo market. As unemployment continues to fall, consumer confidence will rise and household formation will return to more normal levels, resulting in more sales to first-time homebuyers, who drive the market. According to Lawrence Yun, chief economist for the National Association of Realtors, “…it’s all about consistent job growth for a prolonged period, and we’re entering that stage.” The number of houses in foreclosure, according to RealtyTrac, has fallen to pre-crisis levels. This drag on the market has, for the most part, cleared, and as prices continue to rise, potential buyers will be motivated to buy before homes become unaffordable. Despite the recent increases, home prices are still, on average, 23% lower than they were at the peak. Focusing marketing dollars on the people with the highest propensity to buy has always been a challenge, but in this market there are identifiable targets. “Boomerangs” are people who owned real estate in the past but are currently renting and are likely to come back into the market. Marketing to qualified former homeowners could provide a solid return on investment. People renting single-family houses are indicating a lifestyle preference that can be marketed to. Newly formed households are also profitable targets. The housing market, at long last, appears to be turning the corner and normalizing. Experian’s expertise in identifying the right consumers can help lenders pinpoint the people in whom marketing dollars should be invested to realize the highest return.

Understanding Gift Card Fraud (cont.)
By: Angie Montoya
In part one, we spoke about what an amazing deal gift cards (GCs) are, and why they are incredibly popular among consumers. Today we are going to dive deeper and see why fraudsters love gift cards and how they are taking advantage of them. We previously mentioned that it’s unlikely a fraudster is the actual person who redeems a gift card for merchandise. Although some fraudsters may occasionally enjoy a latte or a new pair of shoes on us, it is much more lucrative for them to turn these forms of currency into cold hard cash. Doing so also shifts the risk off the fraudster and onto an unsuspecting victim. For the record, it’s also incredibly easy to do. All of the innovation that was used to streamline the customer experience has also helped to streamline the fraudster experience. The websites used to trade unredeemed cards for other cards or cash are the same websites used by fraudsters. Although the trading sites offer some protections for the customer, the website host is usually left holding the bag when it has paid out for a GC that is later revoked because it was purchased with stolen credit card information. Other sites, like Craigslist and social media yard sale groups, offer no consumer protection at all, so the purchaser has no recourse. What seems like a great deal (buying a GC at a discounted rate) could turn out to be a devalued gift card with no balance, because the merchant caught on to the original scheme. Ten states in the US have passed laws surrounding the cashing out of gift cards.* These laws enable consumers to go to a physical store location and receive, in cash, the remaining balance of a gift card. Most of these states impose a limit of $5, but California has decided to be a little more generous and extend that limit to $10.
As a consumer, it’s a great benefit to be able to receive a small remaining balance in cash, a balance you would likely forget about and might never use, and the laws were passed with this in mind. Unfortunately, fraudsters have zeroed in on this benefit and are taking full advantage of it. We have seen many merchants struggling with fraudulently obtained GCs being cashed out at California locations, precisely because of the state’s higher threshold. While five dollars here and ten dollars there does not seem like much, it adds up when you realize that this could be someone’s full-time job. Cashing out three ten-dollar cards takes about 15 minutes on average, which works out to $120 an hour. Over a 40-hour workweek that is $4,800, or roughly $250,000 over a 52-week year: a six-figure salary.

At this point, you might be asking yourself how fraudsters obtain these GCs in the first place. That part is also fairly easy. User credentials and account information are widely available for purchase in underground forums, due in part to the recent increase in large-scale data breaches. Once fraudsters have obtained these credentials, they can do one of several things:

- Put card data onto a dummy card and use it in a physical store
- Use credit card data to make purchases on any website
- Use existing credentials to log in to a site and purchase with stored payment information
- Use existing credentials to log in to an app, trigger auto-reloading of the account, then transfer the balance to a GC

With all of these daunting threats, what can a merchant do to protect their business? First, make sure your online business is screening both the purchase and the redemption of gift cards, both electronic and physical. When you screen GC purchases, look for things like the quantity of cards purchased, the velocity of orders going to a specific shipping address or email, and the velocity of devices being used to place multiple orders.
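The purchase-screening signals listed above (card quantity, order velocity per shipping address or email, and device velocity) can be expressed as simple rules over an order log. What follows is a minimal Python sketch, not a description of any particular fraud product. The `GiftCardOrder` fields, the device fingerprint, the 24-hour window and all thresholds are hypothetical and would need tuning against real order history.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class GiftCardOrder:
    order_id: str
    placed_at: datetime
    quantity: int      # number of gift cards in the order
    ship_to: str       # shipping address or delivery e-mail
    device_id: str     # device fingerprint (assumed to be collected at checkout)

# Hypothetical thresholds; tune against your own order history.
MAX_CARDS_PER_ORDER = 5
MAX_ORDERS_PER_DESTINATION = 3
MAX_ORDERS_PER_DEVICE = 3
WINDOW = timedelta(hours=24)

def flag_orders(orders):
    """Return {order_id: [reasons]} for orders that trip any rule."""
    flags = defaultdict(list)
    seen_by_destination = defaultdict(list)  # ship_to -> recent order times
    seen_by_device = defaultdict(list)       # device_id -> recent order times
    for order in sorted(orders, key=lambda o: o.placed_at):
        if order.quantity > MAX_CARDS_PER_ORDER:
            flags[order.order_id].append("quantity")
        checks = (
            (seen_by_destination, order.ship_to, MAX_ORDERS_PER_DESTINATION, "destination velocity"),
            (seen_by_device, order.device_id, MAX_ORDERS_PER_DEVICE, "device velocity"),
        )
        for history, key, limit, reason in checks:
            # Keep only orders inside the sliding window, then count this one.
            recent = [t for t in history[key] if order.placed_at - t <= WINDOW]
            recent.append(order.placed_at)
            history[key] = recent
            if len(recent) > limit:
                flags[order.order_id].append(reason)
    return dict(flags)

if __name__ == "__main__":
    t0 = datetime(2015, 6, 1, 12, 0)
    # Four single-card orders to the same e-mail from four different devices.
    orders = [GiftCardOrder(f"o{i}", t0 + timedelta(minutes=10 * i), 1, "drop@example.com", f"dev{i}")
              for i in range(4)]
    print(flag_orders(orders))  # {'o3': ['destination velocity']}
```

In this example, rotating devices avoids the device rule, but the shared delivery address still trips the destination rule. This is why the text recommends checking several velocity dimensions together.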
You also want to monitor the redemption of loyalty rewards and any traffic going into these accounts. Loyalty fraud is a newer type of fraud that has exploded because these channels are not normally monitored for fraud: there is no actual financial loss, so the business has placed its priorities elsewhere. However, loyalty points can be redeemed for gift cards or sold on the black market, and the downstream effect is inconvenienced customers and damage to your brand’s image. Additionally, if you offer physical GCs, you want to have a scratch-off PIN on the back of the card. If a GC is offered with no PIN, fraudsters can walk into a store, take pictures of the card numbers, and then redeem them online once the cards have been activated. Fraudsters will also tumble card numbers (cycle through candidate numbers) once they have figured out the numerical sequence of the cards. Requiring a PIN prevents both of these problems, because a card number alone is no longer enough to redeem the card. The use of GCs will continue to increase in the coming years, which is no surprise. Mobile will continue to be incorporated into these offerings, and answering security challenges will be paramount to their success. Although we are in the age of the data breach, there is no reason the experience of purchasing or redeeming a gift card should be hampered by overly cautious fraud checks. It’s possible to strike the right balance: grow your business securely by implementing a fraud solution that is fraud-minded AND customer-centric.

*GC and eGC are used interchangeably.
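Card tumbling tends to leave a recognizable trail: a single device failing on many card numbers that sit close together in sequence. The Python sketch below flags that pattern from a log of redemption or balance-check attempts. It is an illustrative heuristic that rests on several assumptions: card numbers parse as integers, a device identifier is logged with each attempt, and the `min_failures` and `max_gap` thresholds are hypothetical. It complements the PIN requirement described above rather than replacing it.

```python
from collections import defaultdict

def find_tumbling_devices(attempts, min_failures=5, max_gap=10):
    """Flag devices whose failed attempts cover a tight run of card numbers.

    attempts: iterable of (device_id, card_number, succeeded) tuples, where
    card_number is assumed to be an int. A "run" is a chain of distinct
    failed card numbers in which neighbours differ by at most max_gap.
    Both thresholds are hypothetical defaults.
    """
    failed = defaultdict(set)
    for device_id, card_number, succeeded in attempts:
        if not succeeded:
            failed[device_id].add(card_number)

    flagged = set()
    for device_id, numbers in failed.items():
        ordered = sorted(numbers)
        longest = run = 1
        for prev, cur in zip(ordered, ordered[1:]):
            # Extend the run while numbers stay close together; otherwise restart.
            run = run + 1 if cur - prev <= max_gap else 1
            longest = max(longest, run)
        if longest >= min_failures:
            flagged.add(device_id)
    return flagged

if __name__ == "__main__":
    base = 6_000_000_000_001_000  # hypothetical card-number range
    log = [("devA", base + i, False) for i in range(6)]  # sequential guesses
    log += [("devB", base + 500, False), ("devB", base + 900, True)]
    print(find_tumbling_devices(log))  # {'devA'}
```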

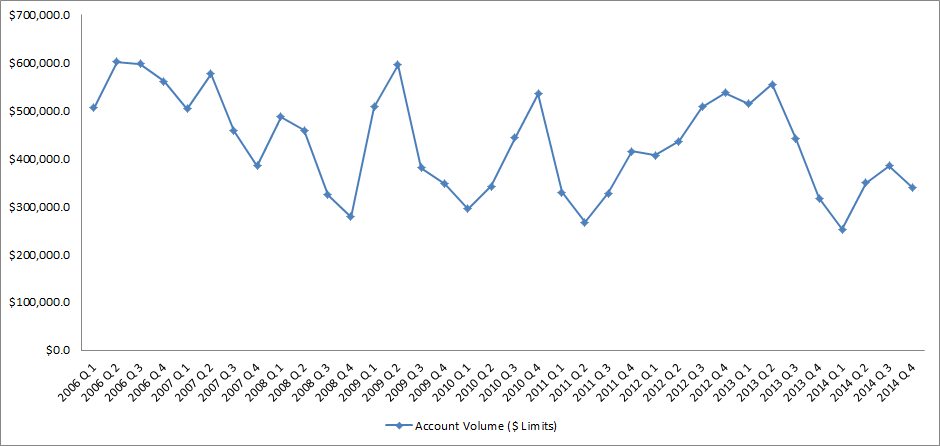

End-of-Draw approaching for many HELOCs

Home equity lines of credit (HELOCs) originated during the U.S. housing boom period of 2006 to 2008 will soon reach their scheduled maturity or repayment phases, also known as “end-of-draw”. The 10-year interest-only draw periods on these lines will end, and the balances will convert to an amortization schedule covering both principal and interest. The substantial increase in the monthly payment may shock many borrowers and leave them facing liquidity issues. Many lenders are aware that the HELOC end-of-draw issue is drawing near and have been trying to get ahead of it and restructure this debt. RealtyTrac, the leading provider of comprehensive housing data and analytics for the real estate and financial services industries, foresees this reset risk becoming a much bigger crisis than lenders are expecting. A large percentage of outstanding HELOCs are secured by properties that are still underwater. That share was 40% in 2014 and is expected to peak at 62% in 2016, 10 years after the peak of the U.S. housing bubble. RealtyTrac executives are concerned that the number of properties with a loan-to-value ratio of 125% or more is higher than predicted.

The Office of the Comptroller of the Currency (OCC), the Board of Governors of the Federal Reserve System, the Federal Deposit Insurance Corporation, and the National Credit Union Administration (collectively, the agencies), in collaboration with the Conference of State Bank Supervisors, have jointly issued regulatory guidance on risk management practices for HELOCs nearing end-of-draw. The agencies expect lenders to manage these risks in a disciplined manner, recognizing risk and working with distressed borrowers to avoid unnecessary defaults. Lenders need a comprehensive strategic plan to proactively manage the outstanding HELOCs in their portfolios that are nearing end-of-draw.
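The payment shock at end-of-draw is easy to quantify. The Python sketch below compares the interest-only payment with the level monthly payment that fully amortizes the same balance. The $50,000 balance, the 6% fixed rate and the 20-year repayment period are illustrative assumptions, not figures from RealtyTrac or the agencies. Many HELOCs carry variable rates, so in practice the rate can change at the same time.

```python
def interest_only_payment(balance, annual_rate):
    """Monthly payment during the draw period: interest only, no principal."""
    return balance * annual_rate / 12

def amortizing_payment(balance, annual_rate, months):
    """Level monthly payment that repays principal and interest over `months`."""
    r = annual_rate / 12
    if r == 0:
        return balance / months
    return balance * r / (1 - (1 + r) ** -months)

if __name__ == "__main__":
    balance, rate = 50_000, 0.06                        # hypothetical balance and fixed rate
    before = interest_only_payment(balance, rate)
    after = amortizing_payment(balance, rate, 20 * 12)  # assumed 20-year repayment
    print(f"${before:,.2f} -> ${after:,.2f} ({after / before - 1:.0%} increase)")
    # $250.00 -> $358.22 (43% increase)
```

Even at an unchanged rate, this hypothetical borrower’s payment rises by about 43% overnight. That jump is the liquidity shock the lenders and regulators are concerned about.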
Lenders who do not get ahead of the end-of-draw issue now may see a negative impact on their bottom line and their brand perception in the market, as well as increased regulatory scrutiny. It is important for lenders to show awareness of each consumer’s needs and tailor an appropriate, individual solution. Below is Experian’s recommended best practice for restructuring HELOCs nearing end-of-draw:

- Qualify: Qualify consumers who have a HELOC that was opened between 2006 and 2008.
- Assess viability: Assess which HELOCs are ideal candidates for restructuring based on the consumer’s:
  - Overall debt-to-income ratio
  - Combined loan-to-value ratio
- Refine offer: Tailor the offer to each consumer’s needs:
  - A monthly payment they can afford
  - The opportunity to restructure the debt into a first mortgage
- Target: Target the consumers most likely to accept the offer:
  - Consumers with recent mortgage inquiries
  - Consumers who are in the market for a HELOC

Lenders should consider partnering with companies that have the right toolkit to give them the greatest decisioning power when restructuring HELOC end-of-draw debt. It is essential that lenders restructure this debt as effectively and efficiently as possible in order to provide the best overall solution for each individual consumer.

Revamp your mortgage and home equity acquisition strategies with advanced analytics.
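The four steps above can be sketched as a simple filter-and-offer pipeline. What follows is a minimal Python illustration, not Experian’s implementation. The `HelocAccount` fields and every cut-off (the 43% DTI ceiling, the 100% CLTV ceiling for viability, and the 80% CLTV limit for folding the debt into a first mortgage) are hypothetical choices. For simplicity, the code applies the targeting filter before refining the offer.

```python
from dataclasses import dataclass

@dataclass
class HelocAccount:
    consumer_id: str
    origination_year: int
    dti: float                      # overall debt-to-income ratio, e.g. 0.38
    cltv: float                     # combined loan-to-value ratio, e.g. 0.95
    recent_mortgage_inquiry: bool
    shopping_for_heloc: bool

# Hypothetical cut-offs for illustration only.
MAX_DTI = 0.43
MAX_CLTV = 1.00
FIRST_MORTGAGE_MAX_CLTV = 0.80

def qualifies(a):
    # Qualify: HELOC opened between 2006 and 2008.
    return 2006 <= a.origination_year <= 2008

def viable(a):
    # Assess viability on overall DTI and combined LTV.
    return a.dti <= MAX_DTI and a.cltv <= MAX_CLTV

def likely_to_accept(a):
    # Target: recent mortgage inquiry or in the market for a HELOC.
    return a.recent_mortgage_inquiry or a.shopping_for_heloc

def refine_offer(a):
    # Refine: fold into a first mortgage only when equity allows it.
    if a.cltv <= FIRST_MORTGAGE_MAX_CLTV:
        return "restructure into first mortgage"
    return "affordable payment plan"

def restructuring_offers(accounts):
    """Map consumer_id -> offer for accounts that pass every step."""
    return {a.consumer_id: refine_offer(a) for a in accounts
            if qualifies(a) and viable(a) and likely_to_accept(a)}

if __name__ == "__main__":
    accounts = [
        HelocAccount("c1", 2007, 0.35, 0.75, True, False),   # first-mortgage candidate
        HelocAccount("c2", 2006, 0.40, 0.95, False, True),   # payment-plan candidate
        HelocAccount("c3", 2010, 0.30, 0.60, True, True),    # outside origination window
        HelocAccount("c4", 2008, 0.55, 0.70, True, False),   # DTI above cut-off
    ]
    print(restructuring_offers(accounts))
```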

By: Kyle Enger, Executive Vice President of Finagraph

Small business remains one of the largest and most profitable client segments for banks. Small businesses provide low-cost deposits and high-quality loans, and they offer numerous cross-selling opportunities. However, recent reports indicate that a majority of business owners are dissatisfied with their banking relationship. In fact, more than 33 percent are actively shopping for a new one. With limited access to credit after the worst of the financial crisis, plus a lack of service and attention, many business owners have lost confidence in banks and their bankers. Before the financial crisis, business owners ranked their banker number three on the list of top trusted advisors. Today bankers have fallen to number seven, below the medical system, the president and religious organizations, as reported in a recent Gallup poll, “Confidence in Institutions.” In order to gain a foothold with existing clients and prospects, here is a roadmap banks can use to build trust and effectively meet the needs of today’s small business client.

Put feet on the street. To rebuild trust, banks need to get in front of their clients face to face and begin engaging with them on a deeper level. Even in the digital age, business customers still want face-to-face contact with their bank. The only effective way to provide it is to put feet on the street and begin having conversations with clients. Whether in person or via Skype, phone calls, text, e-mail or Twitter, having knowledgeable bankers accessible is the first step in creating a trusting relationship.

Develop business acumen. Business owners need someone who is aware of their pain points, can offer the right products for their financial needs, and can provide a long-term plan for growth. To do so, banks need to invest in developing the business and relationship acumen of their sales forces so that bankers can become trusted advisors.
One of the best ways to launch a new class of relationship bankers is to invest in educational events for both bankers and borrowers. This creates an environment of learning, transparency and growth.

Leverage technology to enhance client relationships. Commercial and industrial lending is an expensive delivery model because bankers must work closely with business owners on an ongoing basis. This approach can be time-consuming and costly, as bankers must monitor inventory, understand financials, and make recommendations to improve the financial health of a business. However, if banks use technology to give bankers the tools they need to be more effective in their client interactions, they can create a winning combination. Examples include online chat, an educational forum, and a financial intelligence tool to quickly review financials, provide recommendations and make loan decisions.

Authenticate your value proposition. Business owners have choices when it comes to selecting a financial services provider, which is why it is important that every banker has a clearly defined value proposition. A value proposition is more than a generic list of attributes developed from a routine sales training program. It is a way of interacting, responding and collaborating that validates those words and brings the value proposition to life. Simply claiming to provide the best service means nothing if it takes 48 hours to return phone calls. Words are meaningless without action, and business owners are particularly jaded when it comes to empty elevator pitches delivered by bankers.

Never stop reaching out. Throughout the lifecycle of a business, its owner uses between 12 and 15 bank products and services, yet the national product-per-customer ratio averages around 2.5. Simply put, companies are spreading their banking needs across multiple organizations. The primary cause?
The banker likely never asked them if they had any additional businesses or needs. As a relationship banker to small businesses, it is your duty to bring the power of the bank to the individual client. By focusing on adding value through superior customer experience and technology, financial institutions will be better positioned to attract new small business banking clients and expand wallet share with existing clients. By implementing these five strategies, you will create closer relationships, stronger loan portfolios and a new generation of relationship bankers. To view the original blog posting, click here. To read more about the collaboration between Experian and Finagraph, click here.

By: Linda Haran

Complying with complex and evolving capital adequacy regulatory requirements is the new reality for financial services organizations, and compliance doesn’t seem to have gotten any easier in the years since CCAR was introduced under the Dodd-Frank Act. Many banks have seen capital plans approved by the Fed one year and rejected in the following year’s review, making compliance with the regulation feel very much like a moving target. As a result, several banks have recently formed a think tank of sorts to collaborate on what the Fed is looking for in capital plan submissions.

Complying with CCAR is a very complex, data-intensive exercise that requires specialized staff. The regulators have not formally outlined an approach or methodology for preparing these annual submissions, so banks are on their own to translate the complex requirements into a comprehensive plan that will ensure their capital plans are accepted by the Fed. As banks work to perfect the methodology used in this exercise, the Fed continues to fine-tune the requirements by changing submission dates, Tier 1 capital definitions, and so on. As the regulation continues to evolve, banks will need to keep pace with the changing requirements and continually evaluate current processes to assess where they can be enhanced. The capital planning exercise remains complex, and employing various methodologies to produce the most complete view of loss projections before submitting a final plan to the Fed is crucial to having the plan approved. Banks should use all available resources and consider partnering with third-party organizations experienced in both loss forecasting model development and regulatory consulting in order to stay ahead of the regulations and avoid having capital plan submissions rejected.
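The post notes that regulators have not prescribed a methodology for loss projections. One widely used building block is the expected-loss decomposition EL = PD × LGD × EAD (probability of default, loss given default, exposure at default), evaluated per portfolio segment under each stress scenario. The Python sketch below shows only that arithmetic. The segments, the scenario names and every PD, LGD and exposure value are hypothetical. A real capital plan requires far more than this single calculation.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    name: str
    ead: float  # exposure at default, in dollars
    lgd: float  # loss given default, as a fraction of exposure

# Hypothetical default probabilities by segment under two illustrative scenarios.
SCENARIO_PD = {
    "baseline": {"cards": 0.03, "heloc": 0.015},
    "severely_adverse": {"cards": 0.08, "heloc": 0.05},
}

def projected_loss(segments, scenario):
    """Sum of PD x LGD x EAD across segments for one scenario."""
    pds = SCENARIO_PD[scenario]
    return sum(pds[s.name] * s.lgd * s.ead for s in segments)

if __name__ == "__main__":
    portfolio = [Segment("cards", 1_000_000, 0.85), Segment("heloc", 2_000_000, 0.40)]
    for scenario in SCENARIO_PD:
        print(scenario, round(projected_loss(portfolio, scenario), 2))
```

Comparing results across scenarios is the point of the exercise. Here the hypothetical severe scenario roughly triples projected losses, and a bank would test whether its capital absorbs that difference.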
Learn how Experian can help you meet the latest regulatory requirements with our Loss Forecasting Model Services.