Latest Posts

There are some definite misunderstandings about the lifecycle of fraud. The very first phase is infection – and regardless of HOW it happens, the victim’s machine has been compromised. You may have no knowledge of this fact and no control over it. All of that compromised data is off in the ether and has been sold. The next phase is validation: the next set of fraudsters checks that those compromised accounts work and that they got their money’s worth. It’s only at the last phase – theft – that any money movement occurs. We call this out because a lot of organizations out there have built their entire solution around this last phase. We would say you are about two weeks too late, as the crime actually began much earlier.

So how can you protect your organization? Here are five take-aways to consider:

1. User / device trust. Do this user and device share a history? Has this user been seen on, or associated with, this device before? It may not be fraud, but it is something we watch for.
2. User / device compatibility. Does the device align with the devices this user has used in the past? What are the attributes of the device with respect to the user’s preferences, profile and so on?
3. Device hostility. Look at the device’s behavior across your ecosystem. How many identities has it been associated with? Is it associated with a large number of personal attributes, or focused on risky activities?
4. Malware. Does this device configuration suggest malware? Because we have information about the device itself, we can show whether it has been infected.
5. Device reputation. Has this device been associated with previous crimes? Some organizations have built their entire solution around device reputation. We believe it is worth including, but it’s more important to look at everything in context across your entire ecosystem rather than focus on just one area.

Want to learn more? Listen to the on-demand webinar “Where the WWW..wild things are – when good data is exploited for fraudulent gain”.
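The five take-aways above work best as independent signals rather than a single yes/no verdict. Below is a minimal Python sketch of that idea. It assumes hypothetical `UserProfile` and `DeviceProfile` records, uses time zone and browser language as stand-in compatibility attributes, and sets an arbitrary `MAX_IDENTITIES` threshold. None of these names or values come from 41st Parameter.

```python
from dataclasses import dataclass, field

# Hypothetical record of what a fraud team might know about one device.
@dataclass
class DeviceProfile:
    device_id: str
    users_seen: set[str] = field(default_factory=set)  # identities seen on this device
    timezone: str = ""
    language: str = ""
    malware_indicators: bool = False
    linked_to_prior_fraud: bool = False

# Hypothetical record of a user's usual attributes.
@dataclass
class UserProfile:
    user_id: str
    usual_timezone: str
    usual_language: str

MAX_IDENTITIES = 3  # hypothetical threshold for "device hostility"

def assess(user: UserProfile, device: DeviceProfile) -> list[str]:
    """Return the take-aways that flag this user/device pair (not a fraud verdict)."""
    flags = []
    # 1. User / device trust: has this user been seen on this device before?
    if user.user_id not in device.users_seen:
        flags.append("no shared history")
    # 2. User / device compatibility: do device attributes fit the user's profile?
    if (device.timezone, device.language) != (user.usual_timezone, user.usual_language):
        flags.append("incompatible with user profile")
    # 3. Device hostility: how many identities has this device touched?
    if len(device.users_seen) > MAX_IDENTITIES:
        flags.append("associated with many identities")
    # 4. Malware indicators in the device configuration.
    if device.malware_indicators:
        flags.append("malware indicators")
    # 5. Device reputation: tied to previous crimes?
    if device.linked_to_prior_fraud:
        flags.append("linked to prior fraud")
    return flags

alice = UserProfile("alice", "America/Chicago", "en-US")
dev = DeviceProfile("d1", users_seen={"bob", "carol", "dave", "erin"},
                    timezone="Europe/Kyiv", language="ru-RU")
print(assess(alice, dev))
# ['no shared history', 'incompatible with user profile', 'associated with many identities']
```

The function returns every flag that fires instead of stopping at the first one. This keeps the whole context in view, in line with the advice not to rely on device reputation alone.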

FICO, a leading predictive analytics and decision management software company, has partnered with 41st Parameter®, a part of Experian® and a leader in securing online relationships, to fight fraud on card-not-present (CNP) transactions, the top source of payment card fraud today, while letting more genuine transactions proceed in real time. FICO is integrating 41st Parameter’s TrustInsight™ with the FICO® Falcon® Platform, which protects 2.5 billion card accounts and is used by more than 9,000 financial institutions worldwide. Authenticating the device being used in a transaction provides yet another layer of detection to the Falcon Platform, which includes proprietary analytics based on more than 30 patents. 41st Parameter’s TrustInsight™ solution provides a real-time analysis of a transaction, crowd-sourced from a network of merchants, that produces a TrustScore™ indicating whether the transaction is likely to be genuine and should be approved. TrustInsight helps reduce the number of “false positives,” or good transactions that are declined or investigated by the card issuer. The TrustScore, integrated with the FICO Falcon Fraud Manager Platform, provides a link between data the merchant knows and data the issuer knows to enable issuers to utilize additional information that is not currently available in their fraud detection process, including the identification of a cardholder’s “trusted devices.” Read the entire release here.

During last week’s live webinar, David Britton, online fraud industry expert and vice president, industry solutions at 41st Parameter, said this: “At 41st Parameter, we begin our days somewhat differently. We believe that the internet was never built with security in mind. We also assume that all user data has been stolen. Every bit of consumer data has been compromised. Why? It puts us in a much more heightened state of awareness to help mitigate the type of environment we work in. We also believe that we are not just fighting against naturally evolving organisms. Rather, we are combating very sophisticated and powerfully motivated individuals who are highly creative.” During the 45-minute live webinar, Britton also provided five distinct actions that businesses can take to help protect their organizations, as well as real-world strategies for preventing and detecting fraud online AND maintaining a positive online experience for valued customers. Want to learn more? Watch the on-demand webinar here, and stay tuned for next month’s panel, where we will focus on the surveillance and validation of data prior to theft. Viewers will be armed with tactics that they can leverage in their own organizations.

By: Mike Horrocks

Living just outside of Indianapolis, I can tell you that the month of May is all about "The Greatest Spectacle in Racing," the Indy 500. The four horsemen of the apocalypse could be in town, but if those horses are not sponsored by Andretti Racing or Pennzoil – forget about it. This year the race was a close one, with three-time Indy 500 winner Helio Castroneves losing by .06 of a second. It doesn’t get much closer. So looking back, there are some great lessons from Helio that I want to share with auto lenders:

1. You have to come out strong and with a well-oiled machine. Castroneves led the race for 38 laps with no contest. You cannot do that without a great car and team. So ask yourself: are you handling your auto lending with a solution that has the ability to lead the market, or are you having to go to the pits often just to keep pace?

2. You need to stay ahead of the pack until the end. Castroneves will be the first to admit that his car was not giving him all the power he wanted on the 196th lap. Remember, there are only 200 laps in the race, so with only four laps to go, that is not a good time to have a hiccup. If your lending strategy hasn't changed "since the first lap," you could have the same problem getting across the finish line. Take time to make sure your automated scoring approach is valid, question your existing processes, and consider getting an outside look from leaders in the industry to make sure you are still firing on all cylinders.

3. Time kills. Castroneves lost by .06 seconds. That .06 of a second means he was denied access into a very select club of four-time winners. That .06 of a second means he does not get to drink that coveted glass of milk. If your solution is not providing your customers with the fastest and best credit offers, how many deals are you losing? What exclusive club of top auto lenders are you being denied access to? Second place is no fun.
If you're Castroneves, there's no substitute for finishing first at the Indianapolis Motor Speedway. Likewise, in today’s market, there is more need than ever to be in the Winner’s Circle. Take a pit stop, check out your lending process, and see how you're performing against your competitors. And in the spirit of the race – “Ladies and gentlemen, start your engines!”

According to the latest Experian-Oliver Wyman Market Intelligence Report, mortgage originations for Q1 2014 decreased by 53 percent from Q1 2013 ($235 billion versus $515 billion, respectively).

Although 60-day automotive loan delinquencies fell 1.7 percent at a national level when comparing Q1 2014 to Q1 2013, twenty-two states actually experienced a delinquency increase.

Experian's most recent Credit Trends study analyzing current debt levels and credit scores in the top 20 major U.S. metropolitan areas found that Detroit, Michigan, residents have the lowest average debt ($23,604) and Dallas, Texas, residents have the highest average debt ($28,240).¹

Surag Patel, vice president of global product management for 41st Parameter, led a panel discussion on Digital Consumer Trust with experts from the merchant community and financial services industry at this week’s CNP Expo. During the hour-long session, the expert panel, which included Patel, Jeff Muschick of MasterCard and TJ Horan from FICO, discussed primary research explaining the $40 billion in revenue lost each year to unwarranted CNP credit-card declines and what businesses can do to avoid it. Patel began the Thursday morning session by asking the audience how many had bought something online; of course, everyone raised their hands. He then asked how many had been declined, and about half the hands stayed up. “Of those with your hands still up,” he said, “how many of you are fraudsters?” The audience chuckled, but the reality of false positives and unnecessary declines is no laughing matter. Unnecessary declines cause lost revenue and damage the customer relationship with merchants, banks and card issuers. The panel cited a 41st Parameter survey of 1,000 consumers and described their responses to the question: What do you do after you get declined? While many would call the card issuer or try a different payment method, one in six would skip the purchase altogether, one in ten would purchase from a different online merchant, and one in twelve would go buy the item at a brick-and-mortar store. So regardless of who the customer blames, when a good purchase is declined, everybody loses. Jeff Muschick, who works in fraud solutions for MasterCard, spoke about the need for a solid rules engine and recommended that businesses embrace new tools as they emerge to enhance their fraud prevention strategies. He acknowledged that for smaller merchants, keeping up with fraudsters can be incredibly taxing, and that even at larger organizations, fraud departments are often understaffed.
For that reason, he highlighted a tool that many fraud prevention strategies are leaving on the table: cooperation. “We talk about collaboration, but it’s not as gregarious as we’d like it to be.” TJ Horan, who is responsible for fraud solutions at FICO, encouraged merchants, banks, and card issuers to use customer education to mitigate the damage caused by declining good transactions. He observed that “if there was a positive thing to come out of the Target breach (and that’s a big ‘if’), it is an increase in general consumer awareness of credit-card fraud and data protection.” This shapes customers’ attitudes when they are declined. If they realize the decline is probably a measure taken for their own protection, they are likely to be more forgiving. Click here for more information about TrustInsight and how online merchants can increase sales by approving more trusted transactions.
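The survey shares quoted by the panel can be added up to estimate how many declined shoppers are lost as an online sale for the merchant that declined them. The worked calculation below assumes that no respondent falls into more than one of the three groups. The survey results as reported here do not state this.

```python
from fractions import Fraction

# Shares of declined consumers reported from the 41st Parameter survey.
skip_purchase = Fraction(1, 6)          # skip the purchase altogether
other_online_merchant = Fraction(1, 10) # buy from a different online merchant
brick_and_mortar = Fraction(1, 12)      # buy the item in a physical store

# Assumption: the three groups do not overlap, so their shares can be summed.
lost_online_sale = skip_purchase + other_online_merchant + brick_and_mortar
print(lost_online_sale, float(lost_online_sale))  # 7/20 0.35
```

Under that assumption, about 35 percent of declined customers (roughly one in three) never complete the purchase online with the merchant that declined them.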

Today, our TrustInsight division announced a major milestone at this year’s CNP Expo (CardNotPresent). TrustInsight provides reliable TrustScores for a significant portion of US digital consumers by leveraging insights from 150 of the top online retailers in the US. Now retailers, banks and credit card companies can confidently approve more legitimate CNP transactions. As Surag Patel, vice president, global product management, put it, “We have been working with some of the largest online merchants to help them determine the trustworthiness of a customer during a transaction to help let more good transactions through. The result has been a sharp increase in top-line revenue that can be measured in the tens of millions of dollars.” Patel is leading a panel discussion on Digital Consumer Trust at CNP Expo 2014 on Thursday, May 22 with experts from the merchant community and financial services industry. During the hour-long session, the expert panel will discuss primary research explaining the $40 billion in revenue lost each year to unwarranted CNP credit-card declines and what businesses can do to avoid it. Read the full release here.

Following a full year of steady improvement, small-business credit conditions stumbled during the first quarter of 2014.

As part of its guidance, the Office of the Comptroller of the Currency recommends that lenders perform regular validations of their credit score models in order to assess model performance.
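One metric commonly used when validating a credit score model is the Kolmogorov-Smirnov (KS) statistic. It measures the largest gap between the cumulative score distributions of accounts that went bad and accounts that stayed good. The post does not say which metrics the OCC expects, so KS is used here only as a common example. The sketch below uses hypothetical inputs.

```python
def ks_statistic(scores, bad_flags):
    """Largest gap between the cumulative score distributions of bad and good accounts.

    scores: numeric credit scores; bad_flags: True if the account went bad.
    """
    pairs = sorted(zip(scores, bad_flags))
    total_bad = sum(1 for _, bad in pairs if bad)
    total_good = len(pairs) - total_bad
    if total_bad == 0 or total_good == 0:
        raise ValueError("need at least one good and one bad account")

    cum_bad = cum_good = 0
    ks = 0.0
    i = 0
    while i < len(pairs):
        # Consume all accounts with the same score together, so ties do not
        # make the result depend on the order of good and bad accounts.
        score = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == score:
            if pairs[i][1]:
                cum_bad += 1
            else:
                cum_good += 1
            i += 1
        ks = max(ks, abs(cum_bad / total_bad - cum_good / total_good))
    return ks

scores = [500, 550, 600, 650, 700, 750]
bad = [True, True, False, True, False, False]
print(round(ks_statistic(scores, bad), 3))  # 0.667
```

A KS near 0 means the score barely separates good accounts from bad ones. Comparing the current value against the one measured when the model was developed is one way to spot performance degradation.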

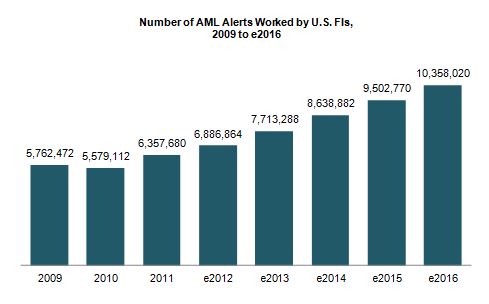

Julie Conroy - Research Director, Aite Group

Finding patterns indicative of money laundering and other financial crimes is akin to searching for a needle in a haystack. With the increasing pressure on banks’ anti-money laundering (AML) and fraud teams, many with this responsibility feel like they’re searching for those needles while a combine bears down on them at full speed. These pressures include:

Regulatory scrutiny: The high-profile (and expensive) U.S. enforcement actions that took place during the last couple of years underscore the extent to which regulators are scrutinizing FIs and penalizing those who don’t pass muster.

Payment volumes and types increasing: As the U.S. economy gradually eases its way into a recovery, payment volumes are increasing. Not only are volumes rebounding to pre-recession levels, but a number of new financial products and payment formats have also been introduced over the last few years. This further increases the workload for the teams who have to screen these payments for money-laundering, sanctions, and global anti-corruption-related exceptions.

Constrained budgets: All of this is taking place at a time when top-line revenue growth is constrained and financial institutions are under pressure to reduce expenses and optimize efficiency.

Illicit activity on the rise: Criminal activity continues to increase at a rapid pace. The array of activity that financial institutions’ AML units are responsible for detecting has also expanded significantly in scope over the last decade, since the USA PATRIOT Act broadened the mandate from pure money laundering to also encompass terrorist financing. Financial institutions have had to transition from primarily account-level monitoring to item-level monitoring, increasing the volume of alerts they must work by orders of magnitude (Figure 1).

[Figure 1: U.S. FIs Are Swimming in Alerts. Source: Aite Group interviews with eight of the top 30 FIs by asset size, March to April 2013]

There are technologies in the market that can help. AML vendors continue to refine their analytic and matching capabilities to help financial institutions reduce false positives without adversely affecting detection rates. Hosted solutions are increasingly available, reducing total cost of ownership and making software upgrades easier. And many institutions are pursuing internal efficiency efforts: reducing vendors, streamlining processes, and eliminating redundant efforts.

How are institutions handling the pressure cooker that is AML compliance? Aite Group wants to know your thoughts. We are conducting a survey of financial institution executives to understand your pain points and proposed solutions. Please take 20 minutes to share your thoughts, and in return, we’ll share a complimentary copy of the resulting report. You can use this data to compare your efforts with those of your peers and to glean new ideas and best practices. All responses will be kept confidential, and no institution names will be mentioned anywhere in the report. You can access the survey here: SURVEY
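Much of the trade-off Aite describes, fewer false positives without lower detection, comes down to how name matching is tuned. The minimal sketch below uses the similarity ratio from Python's standard `difflib` as a stand-in for a vendor's matching engine. `WATCH_LIST`, `normalize`, `screen` and the threshold values are hypothetical. Real screening runs against official lists such as OFAC's SDN list and uses far more sophisticated matching.

```python
from difflib import SequenceMatcher

# Hypothetical watch list for illustration only.
WATCH_LIST = ["Ivan Petrov", "Acme Trading LLC", "Maria Gonzalez"]

def normalize(name: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())

def screen(name: str, threshold: float) -> list[tuple[str, float]]:
    """Return watch-list entries whose similarity to `name` meets the threshold."""
    target = normalize(name)
    hits = []
    for entry in WATCH_LIST:
        score = SequenceMatcher(None, target, normalize(entry)).ratio()
        if score >= threshold:
            hits.append((entry, round(score, 2)))
    return hits

print(screen("ACME Trading, L.L.C.", 0.9))  # [('Acme Trading LLC', 1.0)]
print(screen("Ivan Petrof", 0.9))           # [('Ivan Petrov', 0.91)]
print(screen("Ivan Petrof", 0.95))          # []
```

Raising the threshold suppresses the near-miss alert on the one-letter spelling variant. That cuts alert volume, but it is exactly the kind of variant a launderer might use, which is why threshold tuning has to be weighed against detection rates.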

When a data breach occurs, laws and industry regulations dictate when and if you need to notify consumers whose data might have been compromised. However, many consumers would probably also argue that you’re morally obligated to notify them of data loss. They want you to tell them about the breach, and to do so in a courteous, straightforward manner. Because of this, a breach notification letter is an integral piece of a firm’s breach response. It is often the first inkling consumers have that their information may have been compromised and their identities might be at risk. It’s imperative those letters be efficient, effective – and perhaps most importantly – humane.

A 2014 study by the Ponemon Institute and Experian Data Breach Resolution indicates consumers feel there’s room for improvement in data breach notification letters. The survey polled people who had received a data breach notification letter. Sixty-seven percent of those surveyed said they want letters to better explain the risks and potential harms they may face as a result of the breach, 56 percent want the letter to disclose all the facts, and a third didn’t want the letter to “sugar-coat” the situation. A quarter wanted the letters to be more personal.

The Experian Data Breach Resolution team has vast experience with breach notification letters and data breach notification regulations. In our experience, here are the five most common and egregious errors to avoid when sending a data breach notification letter:

1. Keeping the consumer in the dark about the details. Customers will want to know what information was compromised in the breach. Was it their Social Security number? A credit card number? Their home address? Consumers can’t protect themselves from further harm if they don’t know exactly what’s at risk. Don’t leave them guessing. Tell consumers exactly what information was compromised in the breach.

2. Speaking “legalese.” Reverting to legalese – highly complex verbiage largely understandable only to lawyers – is a defense mechanism for companies, and it doesn’t really help the consumer. Twenty-three percent of those polled by Ponemon said the letter they received would have been better if it had less legal or technical language. Keep letters short, factual and simply worded so that the average Joe or Jane can understand them.

3. Leaving out the ramifications and risks. It’s not enough to simply tell consumers they’ve been involved in a breach. It’s not even enough to tell them what information has been compromised. To truly empower them to protect themselves from further harm, you need to alert consumers to what those risks may be. Consider the type of data that was lost, then explain the risks associated with that type of data loss.

4. Failing to offer an olive branch. Whether the breach was your fault or not, consumers will hold you responsible, and they will feel they should get some kind of compensation for all the grief the breach will cause them. Providing breached customers with an identity protection product not only helps protect them but also shields your company’s reputation. In the Ponemon study, 67 percent of consumers said they felt companies should offer some form of compensation (whether cash, product or service) to consumers caught in a data breach. Sixty-three percent said the company should offer them free identity theft protection, and 58 percent wanted free credit monitoring. Interestingly, 43 percent also said a sincere and personal apology might help convince them to keep their business with the breached organization.

5. Failing to seize the chance to rebuild trust. There’s no question that a data breach undermines customer trust. Some customers will leave a breached company. Among polled customers who remained with the breached company, inertia seemed a major factor in their decision not to go elsewhere: 67 percent said they stayed simply because it was too difficult to find another company offering the same products or services. Fewer than half (45 percent) said they stayed because they were happy with how the company handled the data breach. Breach letters are actually an opportunity to begin rebuilding trust. Explain to consumers what you’re doing to reduce the risk of future breaches, and how you’re taking steps to help protect them from further harm.

Despite your best efforts, a data breach can occur. When it does, the data breach notification letter is your all-important point of first contact with affected consumers. Craft it well, and the letter can be a valuable tool for mitigating reputation damage and rebuilding trust. Learn more from our Knowledge Center.
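When letters are generated from breach records, errors 1 and 3 can be guarded against mechanically. The rule is that every compromised data type must map to a plain-language description of what was exposed and what that could mean for the consumer. In the minimal sketch below, the `DATA_TYPES` table, its wording, and `exposure_section` are hypothetical. They are not drawn from the Ponemon study or from any regulation.

```python
# Hypothetical mapping: data type key -> (plain-language label, plain-language risk).
DATA_TYPES = {
    "ssn": ("Social Security number",
            "Someone could use it to open new credit accounts in your name."),
    "card_number": ("Credit card number",
                    "Someone could make unauthorized charges to your card."),
    "home_address": ("Home address",
                     "Combined with other details, it could help someone impersonate you."),
}

def exposure_section(compromised: list[str]) -> str:
    """Build the 'what was exposed and what it means' part of a letter."""
    lines = []
    for key in compromised:
        if key not in DATA_TYPES:
            # Fail loudly rather than send a letter that skips an exposed data type.
            raise KeyError(f"no plain-language description for {key!r}")
        label, risk = DATA_TYPES[key]
        lines.append(f"{label}: {risk}")
    return "\n".join(lines)

print(exposure_section(["ssn", "card_number"]))
```

Raising an error for an unmapped data type means a letter cannot go out while silently omitting something the consumer needs to know about.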

As we discussed in our earlier Heartbleed post, several new vulnerabilities online and in the mobile space are increasing the challenges that security professionals face. Fraud education is a necessity for companies to help mitigate future fraud occurrences. Another critical component in assessing online and mobile fraud is device intelligence. To be fraud-ready, companies must understand and address three areas within device intelligence: device recognition, device configuration and device behavior.

Device recognition
Online situational awareness starts with device recognition. On online sites there are no human users, only devices claiming to represent them, and companies need to be able to detect high-risk fraud events among them. A number of analytical capabilities are built on top of device recognition:
Tracking the device’s history with the user and evaluating its trust level.
Tracking the device across multiple users and evaluating whether the device is impersonating them.
Maintaining a list of devices previously associated with confirmed fraud.
Correlating seemingly unrelated frauds to a common fraud ring and profiling its method of operation.

Device configuration
The next level of situational awareness is built around the ability to evaluate a device’s configuration in order to identify fraudulent access attempts. This analysis should include the following capabilities:
Making sure the configuration is compatible with the user it claims to represent.
Checking for internal inconsistencies suggesting an attempt to deceive.
Reviewing whether there are any indications of malware.

Device behavior
Finally, online situational awareness should include robust capabilities for profiling a device’s behavior both within individual accounts and across multiple users:
Validating that the device is not focused on activity types often associated with fraud staging.
Confirming that the timing of the activities does not seem designed to avoid detection rules.

By proactively managing online channel risk and combining device recognition with a powerful risk engine, organizations can uncover and prevent future fraud trends and potential attacks. Learn more about Experian fraud intelligence products and services from 41st Parameter, a part of Experian.
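One device recognition capability is correlating seemingly unrelated frauds into a common fraud ring. It can be sketched by linking cases that share a device and grouping them with a union-find (disjoint-set) structure. For illustration only, the sketch assumes that each confirmed fraud case carries a set of hypothetical device identifiers. Production systems link on far richer signals than a single ID.

```python
from collections import defaultdict

def fraud_rings(cases: dict[str, set[str]]) -> list[set[str]]:
    """Group fraud cases that share at least one device, directly or transitively."""
    parent = {case: case for case in cases}

    def find(x: str) -> str:
        # Walk to the root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Link every case to the first case that used the same device.
    first_case_for_device: dict[str, str] = {}
    for case, devices in cases.items():
        for device in devices:
            if device in first_case_for_device:
                union(first_case_for_device[device], case)
            else:
                first_case_for_device[device] = case

    groups: dict[str, set[str]] = defaultdict(set)
    for case in cases:
        groups[find(case)].add(case)
    return list(groups.values())

cases = {
    "case1": {"devA"},
    "case2": {"devA", "devB"},  # shares devA with case1 and devB with case3
    "case3": {"devB"},
    "case4": {"devC"},
}
print(sorted(sorted(ring) for ring in fraud_rings(cases)))
# [['case1', 'case2', 'case3'], ['case4']]
```

Case 1 and case 3 never touch the same device, yet they end up in one ring through case 2. This transitive link is what makes the frauds look unrelated when each case is viewed in isolation.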

The discovery of Heartbleed earlier this year uncovered a large-scale threat: a vulnerability in OpenSSL that poses a serious security concern. The flaw let attackers read data from the memory of the servers behind many websites, putting consumers’ credentials and private information at risk. Since the discovery, most organizations with an online presence have been trying to determine whether their servers use the affected versions of OpenSSL. However, the impact will be felt even by organizations that do not use OpenSSL. Some consumers reuse the same password across sites, so their password may have been compromised elsewhere. New vulnerabilities online and in the mobile space increase the challenges that security professionals face and make fraud education a necessity for companies. Our internal fraud experts share their recommendations in the wake of the Heartbleed bug, along with what companies can do to help mitigate future occurrences. Here are two suggestions on how to prevent compromised credentials from turning into compromised accounts:

1. Authentication
2. Adopting a layered security strategy

Authentication
The importance of multidimensional and risk-based authentication cannot be overstated. Experian Decision Analytics and 41st Parameter® recommend a layered approach when it comes to responding to future threats like the recent Heartbleed bug. Such methods include combining comprehensive authentication processes at customer acquisition with proportionate measures to monitor user activities throughout the life cycle. "Risk-based authentication is best defined and implemented in striking a balance between fraud risk mitigation and positive customer experience," said Keir Breitenfeld, Vice President of Fraud Product Management for Experian Decision Analytics.
"Attacks such as the recent Heartbleed bug further highlight the foundational requirement of any online business or agency applications to adopt multifactor identity and device authentication and monitoring processes throughout their Customer Life Cycle." Some new authentication technologies that do not rely on usernames and passwords could be part of the broader solution. This strategic change involves incorporating a broader layered-security strategy. Relying on authentication alone puts security strategists in a difficult position, since they must balance:
Market pressure for convenience (note that some mobile banking applications now provide access to balances and recent transactions without requiring a formal login).
New automated scripts for large-scale account surveillance.
The rapidly growing availability of compromised personal information.

Layered security
"Layered security through a continuously refined set of ‘locks’ that immediately identify fraudulent access attempts helps organizations to protect their invaluable customer relationships," said Mike Gross, Global Risk Strategy Director for 41st Parameter. "Top global sites should be extra vigilant for an expected rush of fraud-related activities and social engineering attempts through call centers as fraudsters try to take advantage of an elevated volume of password resets." By layering security consistently through a continuously refined set of controls, organizations can identify fraudulent access attempts, unapproved contact information changes and suspicious transactions. Learn more about fraud intelligence products and services from 41st Parameter, a part of Experian.
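Risk-based, layered authentication can be pictured as a set of independent "locks," each adding risk points when it fires. The combined score then decides whether to let the customer through untouched, ask for an additional factor, or block the attempt. This balances fraud mitigation against customer experience, because low-risk sessions see no added friction. The minimal sketch below uses hypothetical lock names, point values and thresholds, not Experian or 41st Parameter values. The password-reset lock reflects the expected rush of resets mentioned above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class AccessAttempt:
    device_recognized: bool
    hours_since_password_reset: Optional[float]  # None if no recent reset
    contact_info_changed: bool
    transfer_amount: float

# Each hypothetical lock: (name, check, risk points added when the check fires).
LOCKS: List[Tuple[str, Callable[[AccessAttempt], bool], int]] = [
    ("unrecognized device", lambda a: not a.device_recognized, 30),
    ("recent password reset",
     lambda a: a.hours_since_password_reset is not None
     and a.hours_since_password_reset < 24, 25),
    ("contact info changed", lambda a: a.contact_info_changed, 25),
    ("large transfer", lambda a: a.transfer_amount > 5_000, 20),
]

STEP_UP_AT = 30  # ask for an additional factor, e.g. a one-time passcode
DENY_AT = 75     # block the attempt and route it to review

def decide(attempt: AccessAttempt) -> Tuple[str, List[str]]:
    """Return an action and the names of the locks that fired."""
    fired = [(name, points) for name, check, points in LOCKS if check(attempt)]
    score = sum(points for _, points in fired)
    if score >= DENY_AT:
        action = "deny"
    elif score >= STEP_UP_AT:
        action = "step_up"
    else:
        action = "allow"
    return action, [name for name, _ in fired]

print(decide(AccessAttempt(True, None, False, 100.0)))   # ('allow', [])
print(decide(AccessAttempt(False, None, False, 100.0)))  # ('step_up', ['unrecognized device'])
print(decide(AccessAttempt(False, 2.0, True, 100.0))[0]) # deny
```

Because each lock is a separate entry, a lock can be added, reweighted or retired without touching the others. This is one way to keep the set of controls "continuously refined."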